1. What is MCP?

Introduction - Model Context Protocol

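MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP as a USB-C port for AI applications.

Just as USB-C gives your devices a standardized way to connect to all kinds of peripherals and accessories, MCP gives AI models a standardized way to connect to different data sources and tools.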
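Concretely, an MCP client and server exchange JSON-RPC 2.0 messages. The sketch below builds two requests by hand, `initialize` and `tools/list`, to show their shape. In practice the MCP SDK generates these for you. `make_request`, the client name and the protocol version string are illustrative assumptions. A real handshake also includes the server's reply and a `notifications/initialized` notification from the client.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC request ids must be unique within a session; a simple counter suffices here
_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper, not part of the MCP SDK)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# First request from the client: protocol version, capabilities and client info
init = make_request("initialize", {
    "protocolVersion": "2024-11-05",  # example protocol revision string
    "capabilities": {},
    "clientInfo": {"name": "demo-client", "version": "0.1.0"},  # hypothetical client
})
# Once initialized, the client asks which tools the server exposes
list_tools = make_request("tools/list")

print(init)
print(list_tools)
```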

2. Build process

Prerequisite concepts

Project architecture

MCP Server

- Stdio transport (local)

- SSE transport (remote)

MCP Client

- Custom client (Python)

- Cursor

- Cline

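MCP transports

At the time of writing, MCP supports two main transports (later revisions of the specification replace HTTP+SSE with a Streamable HTTP transport):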

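Stdio transport

- Intended for local use

- Requires a command-line tool to be installed on the user's machine

- Places specific requirements on the runtime environment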
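With stdio, the client launches the server as a subprocess. The two sides then exchange JSON-RPC messages over stdin/stdout, one message per line, and the specification forbids embedded newlines inside a message. Below is a minimal sketch of that framing. It uses an in-memory buffer in place of a real pipe. `write_message` and `read_messages` are hypothetical helpers, not SDK functions.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    # json.dumps without indent emits a single line (newlines inside strings become \n),
    # so the trailing newline is an unambiguous delimiter
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    # Each non-empty line is one complete JSON-RPC message
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Simulate the server's stdout with an in-memory buffer
buf = io.StringIO()
write_message(buf, {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}})
write_message(buf, {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
buf.seek(0)
for msg in read_messages(buf):
    print(msg)
```

Because stdout carries the protocol itself, a stdio server should send its logs to stderr. A stray `print()` to stdout would corrupt the message stream.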

SSE (Server-Sent Events) transport

- Intended for cloud / remote deployment

- Built on a long-lived HTTP connection
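In the HTTP+SSE transport used in this article, the client opens a GET request to the SSE endpoint and keeps it open. The server first sends an `endpoint` event telling the client where to POST its JSON-RPC requests. It then delivers its own messages as `message` events. The parser below is a simplified sketch that ignores the `id` and `retry` fields. The session id in the sample stream is made up.

```python
import json


def parse_sse(raw: str):
    """Yield (event, data) pairs from a Server-Sent Events stream (simplified)."""
    event, data_lines = "message", []
    for line in raw.splitlines():
        if line == "":
            # A blank line ends the current event
            if data_lines:
                yield event, "\n".join(data_lines)
            event, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one optional space after the colon is dropped
            if field == "event":
                event = value
            elif field == "data":
                data_lines.append(value)


stream = (
    "event: endpoint\n"
    "data: /messages/?session_id=abc123\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n'
    "\n"
)
events = list(parse_sse(stream))
post_path = events[0][1]          # where the client POSTs its requests
reply = json.loads(events[1][1])  # a JSON-RPC response from the server
print(post_path, reply)
```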

Environment setup

The uv environment

- Install uv

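```shell
pip install uv
```

- Initialize the project

```shell
# Create the project directory
uv init mcp-server
cd mcp-server

# Create and activate the virtual environment
uv venv
.venv\Scripts\activate    # Windows; on macOS/Linux: source .venv/bin/activate

# Install dependencies
uv add "mcp[cli]" httpx

# Create the server file
touch main.py
```

Building the tool function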

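To give the LLM access to the technical documentation of popular frameworks, the tool takes the user's query and runs a Google search (through the Serper API) restricted to the relevant documentation domain with the `site:` operator. It then fetches the matching pages, extracts their text, and returns it.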
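On its own, the search step prefixes the query with `site:<domain>` and sends it to Serper as JSON with the `q` and `num` fields used by the server code in this article. `build_search_payload` is a hypothetical helper, and the two-entry mapping is a subset of `docs_urls`, so the sketch runs without network access.

```python
import json

# Two entries from the docs_urls mapping, enough for the sketch
docs_urls = {"agno": "docs.agno.com", "mcp-doc": "modelcontextprotocol.io"}


def build_search_payload(query: str, library: str, num: int = 2) -> str:
    """Return the JSON body for a Serper search restricted to one docs site."""
    if library not in docs_urls:
        raise ValueError(f"Library {library} not supported")
    # "site:" restricts Google results to the library's documentation domain
    return json.dumps({"q": f"site:{docs_urls[library]} {query}", "num": num})


print(build_search_payload("React Agent", "agno"))
# {"q": "site:docs.agno.com React Agent", "num": 2}
```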

Build the library-to-documentation-domain mapping

```python
docs_urls = {
    "langchain": "python.langchain.com/docs",
    "llama-index": "docs.llamaindex.ai/en/stable",
    "autogen": "microsoft.github.io/autogen/stable",
    "agno": "docs.agno.com",
    "openai-agents-sdk": "openai.github.io/openai-agents-python",
    "mcp-doc": "modelcontextprotocol.io",
    "camel-ai": "docs.camel-ai.org",
    "crew-ai": "docs.crewai.com",
}
```

Building the MCP tool

```python
import json
import os

import httpx
from bs4 import BeautifulSoup
from mcp.server.fastmcp import FastMCP

# Tools are registered with the @mcp.tool() decorator of a FastMCP instance
mcp = FastMCP("Agentdocs")

SERPER_URL = "https://google.serper.dev/search"
# docs_urls is the mapping defined above


async def search_web(query: str) -> dict | None:
    """Run a Google search through the Serper API (needs SERPER_API_KEY)."""
    payload = json.dumps({"q": query, "num": 3})
    headers = {
        "X-API-KEY": os.getenv("SERPER_API_KEY"),
        "Content-Type": "application/json",
    }
    async with httpx.AsyncClient() as client:
        try:
            response = await client.post(
                SERPER_URL, headers=headers, content=payload, timeout=30.0
            )
            response.raise_for_status()
            return response.json()
        except httpx.TimeoutException:
            return {"organic": []}


async def fetch_url(url: str) -> str:
    """Download a page and return its visible text."""
    async with httpx.AsyncClient(follow_redirects=True) as client:
        try:
            response = await client.get(url, timeout=30.0)
            soup = BeautifulSoup(response.text, "html.parser")
            # Join text fragments with newlines and strip surrounding whitespace
            return soup.get_text(separator="\n", strip=True)
        except httpx.TimeoutException:
            return "Timeout error"


@mcp.tool()
async def get_docs(query: str, library: str) -> str:
    """
    Search the latest documentation for a given query and library.
    Supports langchain, llama-index, autogen, agno, openai-agents-sdk, mcp-doc, camel-ai and crew-ai.

    Args:
        query: The query to search for (e.g. "React Agent")
        library: The library to search (e.g. "agno")

    Returns:
        Text extracted from the documentation
    """
    if library not in docs_urls:
        raise ValueError(f"Library {library} not supported by this tool")

    query = f"site:{docs_urls[library]} {query}"
    results = await search_web(query)
    if not results or len(results.get("organic", [])) == 0:
        return "No results found"

    text = ""
    for result in results["organic"]:
        text += await fetch_url(result["link"]) + "\n\n"
    return text
```

Wrapping the MCP server (stdio transport)

MCP Server (Stdio)

```python
# main.py
import json
import os

import httpx
from bs4 import BeautifulSoup
from dotenv import load_dotenv
from mcp.server.fastmcp import FastMCP

load_dotenv()  # loads SERPER_API_KEY from a .env file

mcp = FastMCP("Agentdocs")

USER_AGENT = "Agentdocs-app/1.0"
SERPER_URL = "https://google.serper.dev/search"

docs_urls = {
    "langchain": "python.langchain.com/docs",
    "llama-index": "docs.llamaindex.ai/en/stable",
    "autogen": "microsoft.github.io/autogen/stable",
    "agno": "docs.agno.com",
    "openai-agents-sdk": "openai.github.io/openai-agents-python",
    "mcp-doc": "modelcontextprotocol.io",
    "camel-ai": "docs.camel-ai.org",
    "crew-ai": "docs.crewai.com",
}


async def search_web(query: str) -> dict | None:
    """Run a Google search through the Serper API."""
    payload = json.dumps({"q": query, "num": 2})
    headers = {
        "X-API-KEY": os.getenv("SERPER_API_KEY"),
        "Content-Type": "application/json",
    }
    async with httpx.AsyncClient() as client:
        try:
            response = await client.post(
                SERPER_URL, headers=headers, content=payload, timeout=30.0
            )
            response.raise_for_status()
            return response.json()
        except httpx.TimeoutException:
            return {"organic": []}


async def fetch_url(url: str) -> str:
    """Download a page and return its visible text."""
    async with httpx.AsyncClient(follow_redirects=True) as client:
        try:
            response = await client.get(
                url, headers={"User-Agent": USER_AGENT}, timeout=30.0
            )
            soup = BeautifulSoup(response.text, "html.parser")
            return soup.get_text(separator="\n", strip=True)
        except httpx.TimeoutException:
            return "Timeout error"


@mcp.tool()
async def get_docs(query: str, library: str) -> str:
    """
    Search the latest documentation for a given query and library.
    Supports langchain, llama-index, autogen, agno, openai-agents-sdk, mcp-doc, camel-ai and crew-ai.

    Args:
        query: The query to search for (e.g. "React Agent")
        library: The library to search (e.g. "agno")

    Returns:
        Text extracted from the documentation
    """
    if library not in docs_urls:
        raise ValueError(f"Library {library} not supported by this tool")

    query = f"site:{docs_urls[library]} {query}"
    results = await search_web(query)
    if not results or len(results.get("organic", [])) == 0:
        return "No results found"

    text = ""
    for result in results["organic"]:
        text += await fetch_url(result["link"]) + "\n\n"
    return text


if __name__ == "__main__":
    # Serve the tools over stdin/stdout
    mcp.run(transport="stdio")
```

- 安装依赖

uv add beautifulsoup4- 启动命令///

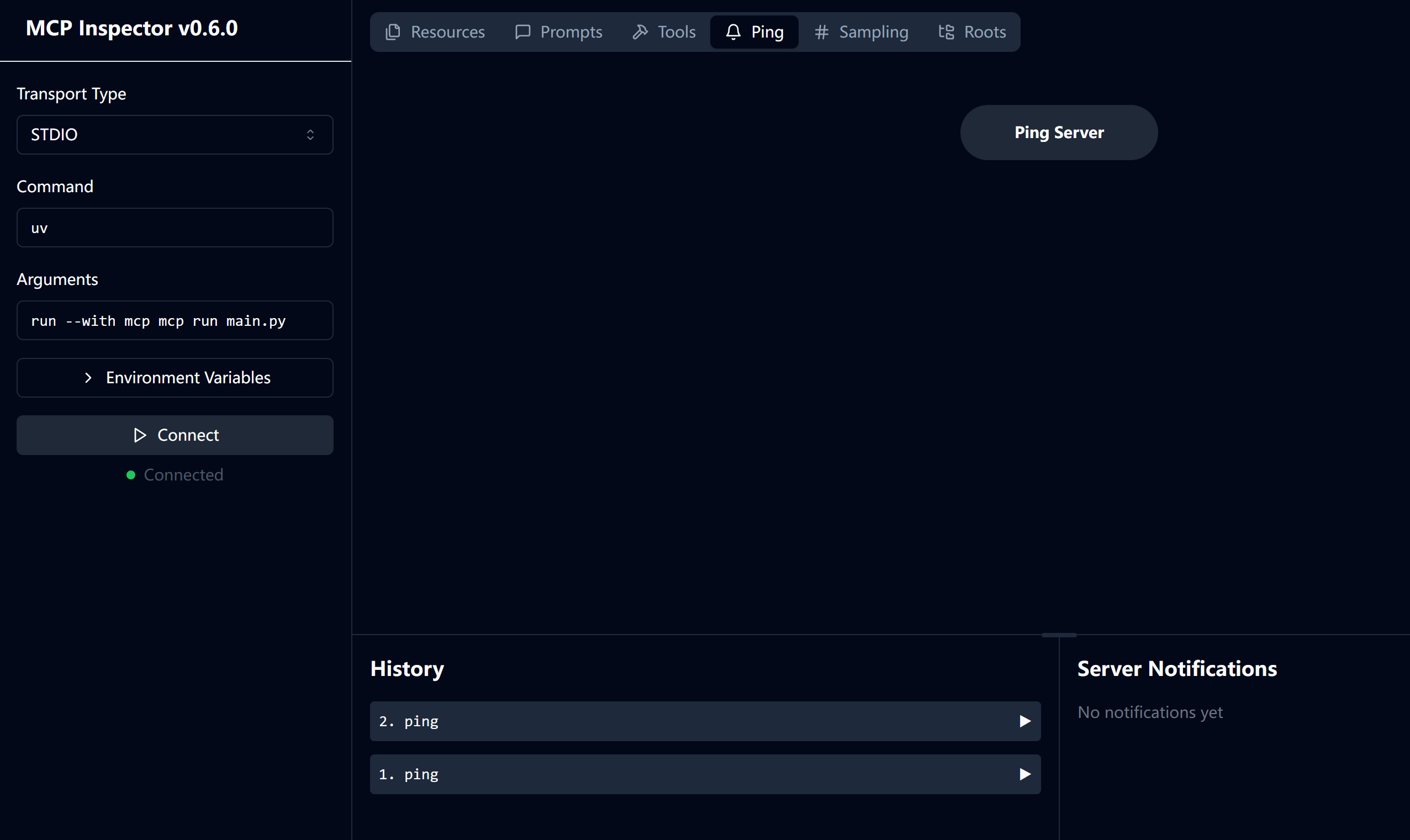

uv run main.py- 使用 MCP Inspector 进行调试

mcp dev main.py- Connect

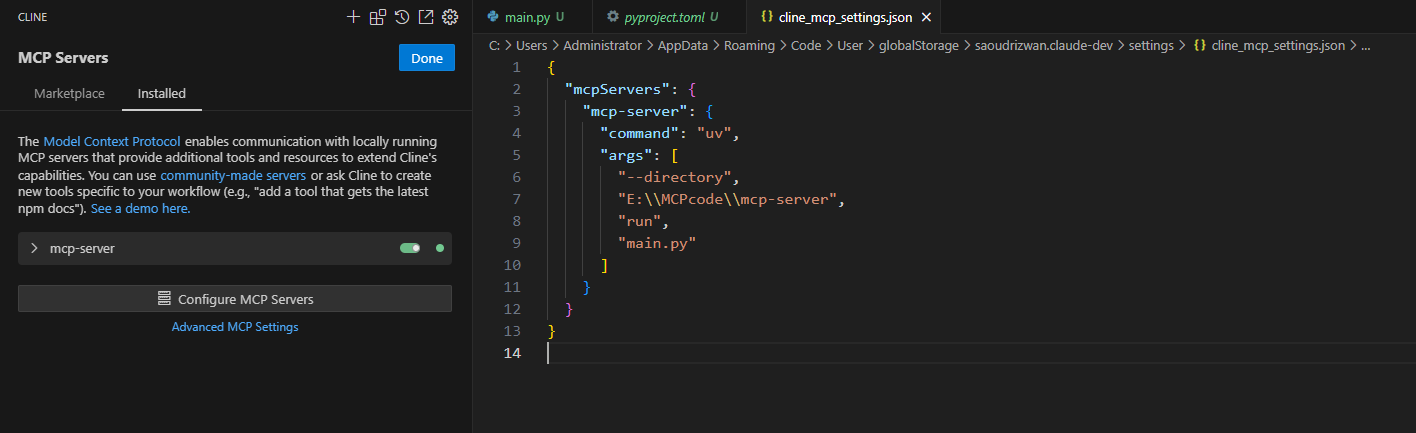
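Clients such as MCP Inspector learn how to call `get_docs` from the `inputSchema` in the server's `tools/list` response. FastMCP derives that JSON Schema from the function's type hints (via Pydantic) and uses the docstring as the description. The stdlib-only sketch below approximates the idea for simple parameter types. `approximate_input_schema` is hypothetical, and FastMCP's real output carries extra keys such as `title`.

```python
import inspect

# Map simple Python annotations to JSON Schema types (simplified subset)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def approximate_input_schema(func) -> dict:
    """Build a rough JSON Schema from a function signature (illustrative only)."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are mandatory
    return {"type": "object", "properties": properties, "required": required}


async def get_docs(query: str, library: str):
    """Search the latest documentation for a given query and library."""


schema = approximate_input_schema(get_docs)
description = inspect.getdoc(get_docs)
print(description)
print(schema)
```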

Client configuration (Cline)

- Install the Cline extension in Visual Studio Code, then configure the MCP server:

```json
{
  "mcpServers": {
    "mcp-server": {
      "command": "uv",
      "args": [
        "--directory",
        "<your project path>",
        "run",
        "main.py"
      ]
    }
  }
}
```

For example:

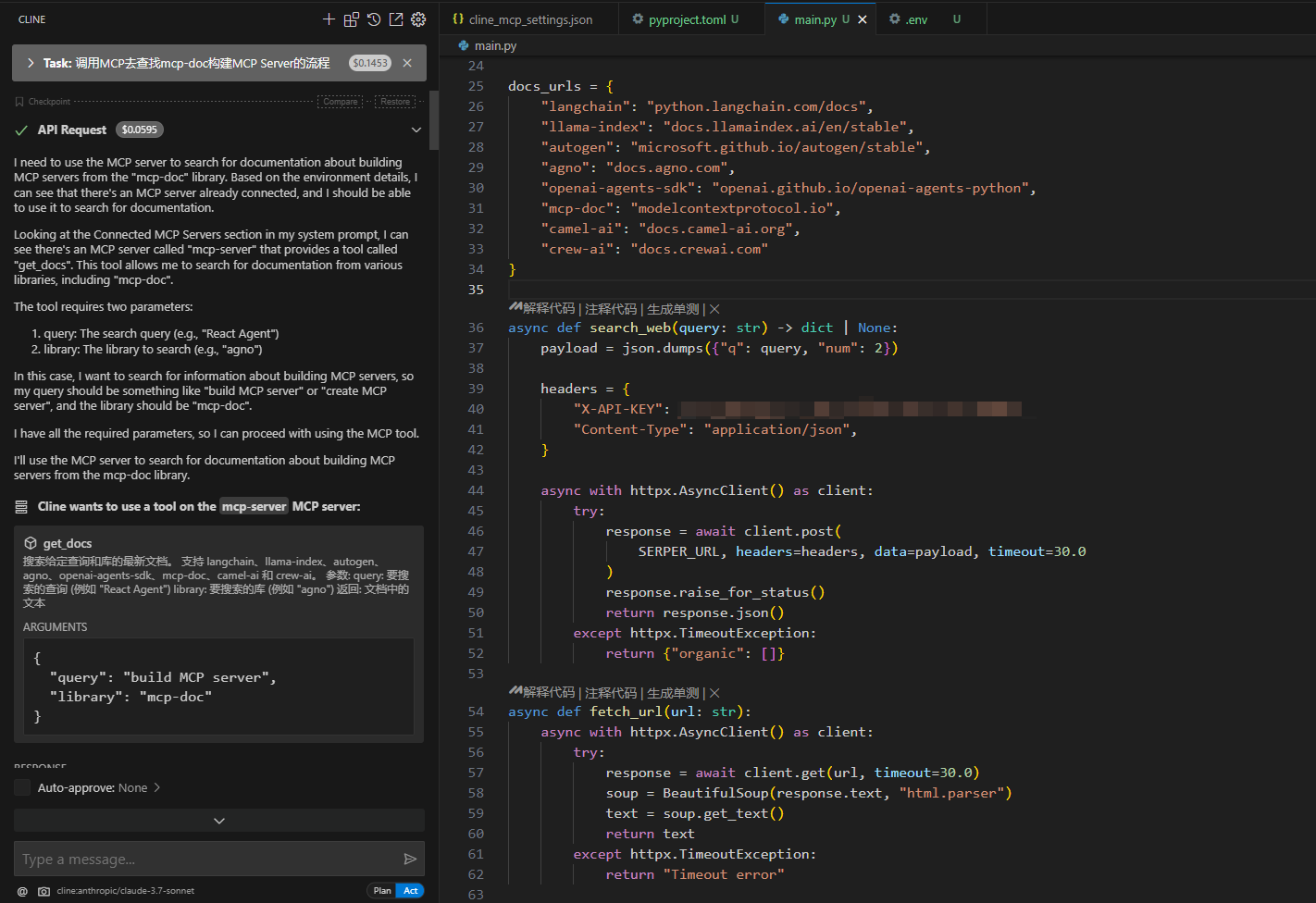

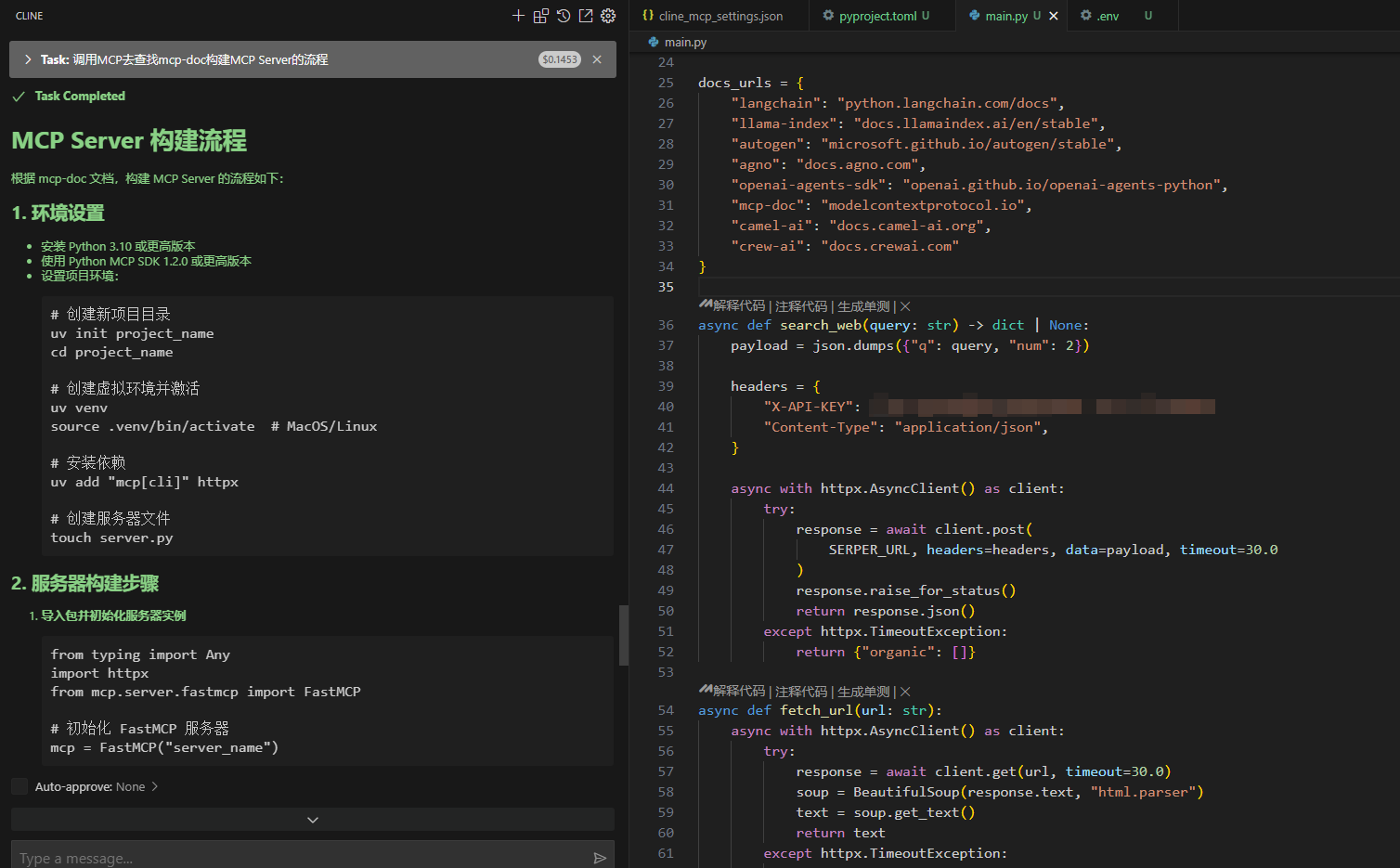

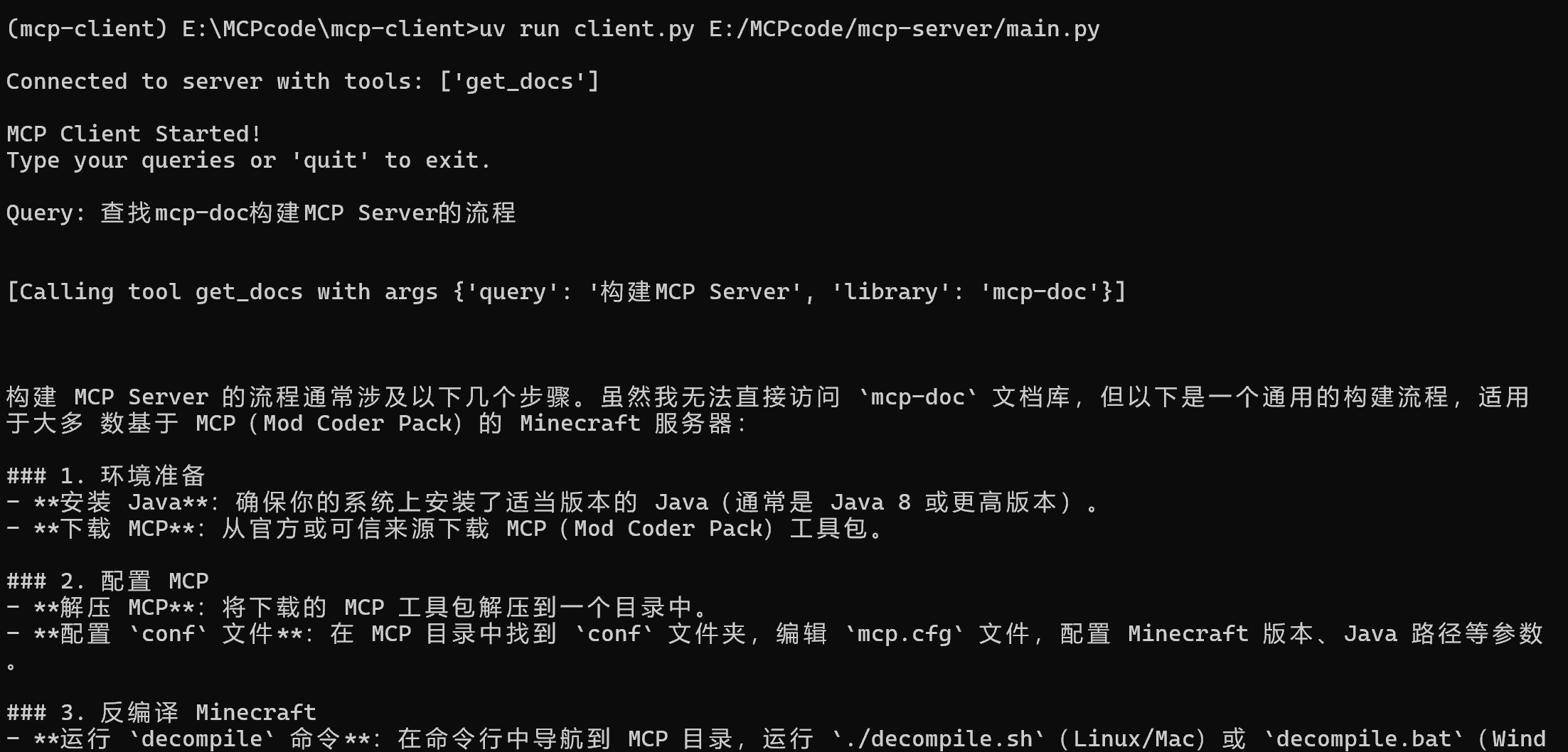
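On Windows, JSON treats `\` as an escape character. A path such as `E:\MCPcode\mcp-server` must therefore be written with doubled backslashes or forward slashes. One way to avoid escaping by hand is to generate the entry with Python. The sketch below reproduces the config shape above. The helper name and the path are only examples.

```python
import json


def cline_server_entry(project_dir: str, script: str = "main.py") -> dict:
    # Same shape as the hand-written config: uv --directory <dir> run <script>
    return {"command": "uv", "args": ["--directory", project_dir, "run", script]}


config = {"mcpServers": {"mcp-server": cline_server_entry(r"E:\MCPcode\mcp-server")}}
text = json.dumps(config, indent=2)
print(text)  # json.dumps escapes each backslash in the path as \\
```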

Asking questions through MCP

Question: Use MCP to look up, in mcp-doc, how to build an MCP server
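For a question like this, once the model decides to use `get_docs`, the client sends the server a `tools/call` JSON-RPC request and reads the text items from the result. Below is a sketch of both messages. The request id and the query string the model chooses are illustrative, not captured from a real session.

```python
import json


def tools_call_request(request_id: int, name: str, arguments: dict) -> dict:
    # JSON-RPC 2.0 request for MCP's tools/call method
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


req = tools_call_request(3, "get_docs", {"query": "build an MCP server", "library": "mcp-doc"})
print(json.dumps(req))

# A successful reply carries a list of content items; text items hold the tool output
sample_response = {
    "jsonrpc": "2.0",
    "id": 3,
    "result": {"content": [{"type": "text", "text": "..."}], "isError": False},
}
texts = [c["text"] for c in sample_response["result"]["content"] if c["type"] == "text"]
```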

Building an SSE MCP server (SSE transport)

Wrapping the MCP server

```python
import argparse
import json
import os

import httpx
import uvicorn
from bs4 import BeautifulSoup
from dotenv import load_dotenv
from mcp.server import Server
from mcp.server.fastmcp import FastMCP
from mcp.server.sse import SseServerTransport
from starlette.applications import Starlette
from starlette.requests import Request
from starlette.routing import Mount, Route

load_dotenv()

mcp = FastMCP("docs")

USER_AGENT = "docs-app/1.0"
SERPER_URL = "https://google.serper.dev/search"

docs_urls = {
    "langchain": "python.langchain.com/docs",
    "llama-index": "docs.llamaindex.ai/en/stable",
    "autogen": "microsoft.github.io/autogen/stable",
    "agno": "docs.agno.com",
    "openai-agents-sdk": "openai.github.io/openai-agents-python",
    "mcp-doc": "modelcontextprotocol.io",
    "camel-ai": "docs.camel-ai.org",
    "crew-ai": "docs.crewai.com",
}


async def search_web(query: str) -> dict | None:
    """Run a Google search through the Serper API."""
    payload = json.dumps({"q": query, "num": 2})
    headers = {
        "X-API-KEY": os.getenv("SERPER_API_KEY"),
        "Content-Type": "application/json",
    }
    async with httpx.AsyncClient() as client:
        try:
            response = await client.post(
                SERPER_URL, headers=headers, content=payload, timeout=30.0
            )
            response.raise_for_status()
            return response.json()
        except httpx.TimeoutException:
            return {"organic": []}


async def fetch_url(url: str) -> str:
    """Download a page and return its visible text."""
    async with httpx.AsyncClient(follow_redirects=True) as client:
        try:
            response = await client.get(
                url, headers={"User-Agent": USER_AGENT}, timeout=30.0
            )
            soup = BeautifulSoup(response.text, "html.parser")
            return soup.get_text(separator="\n", strip=True)
        except httpx.TimeoutException:
            return "Timeout error"


@mcp.tool()
async def get_docs(query: str, library: str) -> str:
    """
    Search the latest documentation for a given query and library.
    Supports langchain, llama-index, autogen, agno, openai-agents-sdk, mcp-doc, camel-ai and crew-ai.

    Args:
        query: The query to search for (e.g. "React Agent")
        library: The library to search (e.g. "agno")

    Returns:
        Text extracted from the documentation
    """
    if library not in docs_urls:
        raise ValueError(f"Library {library} not supported by this tool")

    query = f"site:{docs_urls[library]} {query}"
    results = await search_web(query)
    if not results or len(results.get("organic", [])) == 0:
        return "No results found"

    text = ""
    for result in results["organic"]:
        text += await fetch_url(result["link"]) + "\n\n"
    return text


# SSE transport
def create_starlette_app(mcp_server: Server, *, debug: bool = False) -> Starlette:
    """Create a Starlette application that can serve the provided MCP server with SSE."""
    # Clients POST their JSON-RPC messages to /messages/
    sse = SseServerTransport("/messages/")

    async def handle_sse(request: Request) -> None:
        # Each GET /sse opens a long-lived event stream for one client session
        async with sse.connect_sse(
            request.scope,
            request.receive,
            request._send,  # noqa: SLF001
        ) as (read_stream, write_stream):
            await mcp_server.run(
                read_stream,
                write_stream,
                mcp_server.create_initialization_options(),
            )

    return Starlette(
        debug=debug,
        routes=[
            Route("/sse", endpoint=handle_sse),
            Mount("/messages/", app=sse.handle_post_message),
        ],
    )


if __name__ == "__main__":
    # FastMCP wraps a low-level Server; the SSE transport runs that inner object
    mcp_server = mcp._mcp_server

    parser = argparse.ArgumentParser(description="Run MCP SSE-based server")
    parser.add_argument("--host", default="0.0.0.0", help="Host to bind to")
    parser.add_argument("--port", type=int, default=8020, help="Port to listen on")
    args = parser.parse_args()

    # Bind SSE request handling to the MCP server
    starlette_app = create_starlette_app(mcp_server, debug=True)
    uvicorn.run(starlette_app, host=args.host, port=args.port)
```

Building the MCP client

After trying Cline as the client, you can build your own MCP client for more flexibility in extending the project. This example uses DeepSeek as the LLM API.
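DeepSeek's chat API follows the OpenAI function-calling format, which expects each tool's JSON Schema under the `parameters` key. The client therefore has to translate the `name`, `description` and `inputSchema` fields of every MCP tool into that shape. Below is a minimal sketch of the conversion. `ToolInfo` is a hypothetical stand-in for the SDK's tool objects, so the snippet runs without a server.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolInfo:
    """Hypothetical stand-in for an MCP tool definition (only the fields used here)."""
    name: str
    description: Optional[str]
    inputSchema: dict


def to_openai_tool(tool) -> dict:
    """Convert an MCP tool definition into an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool.name,
            "description": tool.description or "",
            # OpenAI-compatible APIs expect the JSON Schema under "parameters"
            "parameters": tool.inputSchema,
        },
    }


schema = {"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]}
print(to_openai_tool(ToolInfo("get_docs", "Search docs", schema)))
```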

Create a Python project for the MCP client with uv:

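```shell
# Create the project directory
uv init mcp-client
cd mcp-client

# Create the virtual environment
uv venv

# Activate it (Windows; on macOS/Linux: source .venv/bin/activate)
.venv\Scripts\activate

# Install the required packages
uv add mcp python-dotenv
```

Creating the client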

```shell
# Install the OpenAI SDK, used to call DeepSeek's OpenAI-compatible API
uv add openai
```

Setting the DeepSeek API key

Get an API key from the DeepSeek Platform. You can hard-code it, but reading it from an environment variable is recommended.
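Below is a minimal sketch of the recommended approach. Keep the key in a `.env` file, which `load_dotenv()` from python-dotenv copies into the process environment. Then read it with `os.getenv` and fail early with a clear message if it is missing. The variable name `DEEPSEEK_API_KEY` and the helper `load_api_key` are assumptions for this example.

```python
import os


def load_api_key(var_name: str = "DEEPSEEK_API_KEY") -> str:
    """Read an API key from the environment (variable name is an assumption)."""
    key = os.getenv(var_name)
    if not key:
        # Fail at startup instead of on the first API call
        raise RuntimeError(f"{var_name} is not set; add it to your environment or .env file")
    return key
```

The matching `.env` line would be `DEEPSEEK_API_KEY=<your key>`.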

```python
import os
from contextlib import AsyncExitStack
from typing import Optional

from mcp import ClientSession
from openai import OpenAI


class MCPClient:
    def __init__(self):
        # Initialize session and client objects
        self.session: Optional[ClientSession] = None
        self.exit_stack = AsyncExitStack()
        # Read the key from the environment (DEEPSEEK_API_KEY is an assumed name)
        self.deepseek_api_key = os.getenv("DEEPSEEK_API_KEY")
        self.base_url = "https://api.deepseek.com/v1"
        self.model = "deepseek-chat"
        self.client = OpenAI(api_key=self.deepseek_api_key, base_url=self.base_url)
```

uv server configuration

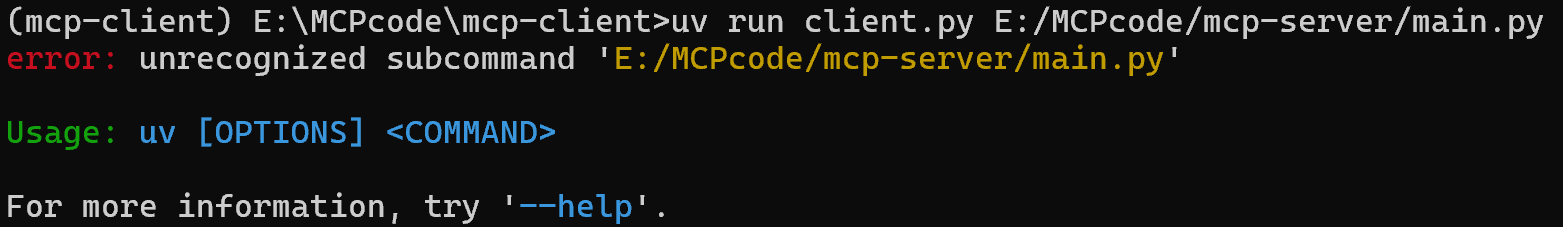

If the client fails to launch the server (see issue #291 in the references), you can fix it by adding the uv server configuration:

```python
# Add the server parameters here: launch the server script through uv
server_params = StdioServerParameters(
    command="uv",
    args=["run", "../mcp-server/main.py"],
    env=None,
)
```

Starting the client

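```shell
uv run client.py E:/MCPcode/mcp-server/main.py
```

Enter a query. The client fetches the tool list, the model picks a tool, the client executes that MCP tool, and the model generates the final answer from the tool's result.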
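The round trip just described can be simulated without a server or an API key. In this sketch, `fake_model` and `fake_call_tool` are hypothetical stand-ins for `chat.completions.create` and `session.call_tool`, and messages are plain dicts. The control flow follows the client's `process_query`. First ask the model. Then run every requested tool and append one `tool` message per call. Finally, ask the model again.

```python
import json


def fake_model(messages, tools=None):
    """Hypothetical model: requests a tool for a user turn, answers after a tool turn."""
    if messages[-1]["role"] == "user":
        call = {"id": "call_1", "type": "function",
                "function": {"name": "get_docs",
                             "arguments": json.dumps({"query": "server", "library": "mcp-doc"})}}
        return {"finish_reason": "tool_calls",
                "message": {"role": "assistant", "content": None, "tool_calls": [call]}}
    return {"finish_reason": "stop",
            "message": {"role": "assistant", "content": "Answer based on: " + messages[-1]["content"]}}


def fake_call_tool(name, args):
    # Stand-in for session.call_tool
    return f"{name}({args['library']}) -> docs text"


def process_query(query):
    messages = [{"role": "user", "content": query}]
    choice = fake_model(messages, tools=[])
    if choice["finish_reason"] != "tool_calls":
        return choice["message"]["content"]
    # Keep the assistant turn that requested the tools, then answer every call id
    messages.append(choice["message"])
    for call in choice["message"]["tool_calls"]:
        args = json.loads(call["function"]["arguments"])
        result = fake_call_tool(call["function"]["name"], args)
        messages.append({"role": "tool", "tool_call_id": call["id"], "content": result})
    return fake_model(messages)["message"]["content"]


print(process_query("How do I build an MCP server?"))
# Answer based on: get_docs(mcp-doc) -> docs text
```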

Full client code

```python
import asyncio
import json
import os
import sys
from contextlib import AsyncExitStack
from typing import Optional

from dotenv import load_dotenv
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client
from openai import OpenAI

load_dotenv()  # load environment variables from .env


class MCPClient:
    def __init__(self):
        # Initialize session and client objects
        self.session: Optional[ClientSession] = None
        self.exit_stack = AsyncExitStack()
        self.deepseek_api_key = os.getenv("DEEPSEEK_API_KEY")
        self.base_url = "https://api.deepseek.com/v1"
        self.model = "deepseek-chat"
        self.client = OpenAI(api_key=self.deepseek_api_key, base_url=self.base_url)

    async def connect_to_server(self, server_script_path: str):
        """Connect to an MCP server

        Args:
            server_script_path: Path to the server script (.py or .js)
        """
        is_python = server_script_path.endswith(".py")
        is_js = server_script_path.endswith(".js")
        if not (is_python or is_js):
            raise ValueError("Server script must be a .py or .js file")

        # Server parameters: Python servers are launched through uv, JS servers through node
        if is_python:
            server_params = StdioServerParameters(command="uv", args=["run", server_script_path], env=None)
        else:
            server_params = StdioServerParameters(command="node", args=[server_script_path], env=None)

        stdio_transport = await self.exit_stack.enter_async_context(stdio_client(server_params))
        self.stdio, self.write = stdio_transport
        self.session = await self.exit_stack.enter_async_context(ClientSession(self.stdio, self.write))
        await self.session.initialize()

        # List available tools
        response = await self.session.list_tools()
        tools = response.tools
        print("\nConnected to server with tools:", [tool.name for tool in tools])

    async def process_query(self, query: str) -> str:
        """Process a query using DeepSeek and the available MCP tools"""
        messages = [{"role": "user", "content": query}]

        # Convert MCP tools to the OpenAI function-calling format ("parameters", not "input_schema")
        response = await self.session.list_tools()
        available_tools = [{
            "type": "function",
            "function": {
                "name": tool.name,
                "description": tool.description,
                "parameters": tool.inputSchema,
            },
        } for tool in response.tools]

        response = self.client.chat.completions.create(
            model=self.model,
            messages=messages,
            tools=available_tools,
        )

        # Handle the returned content
        content = response.choices[0]
        if content.finish_reason == "tool_calls":
            # Store the model's tool-call request, then one result message per call
            messages.append(content.message.model_dump())
            for tool_call in content.message.tool_calls:
                tool_name = tool_call.function.name
                tool_args = json.loads(tool_call.function.arguments)

                # Execute the tool on the MCP server
                result = await self.session.call_tool(tool_name, tool_args)
                print(f"\n\n[Calling tool {tool_name} with args {tool_args}]\n\n")

                messages.append({
                    "role": "tool",
                    "content": result.content[0].text,
                    "tool_call_id": tool_call.id,
                })

            # Send the tool results back to the model to produce the final answer
            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
            )
            return response.choices[0].message.content

        return content.message.content

    async def chat_loop(self):
        """Run an interactive chat loop"""
        print("\nMCP Client Started!")
        print("Type your queries or 'quit' to exit.")
        while True:
            try:
                query = input("\nQuery: ").strip()
                if query.lower() == "quit":
                    break
                response = await self.process_query(query)
                print("\n" + response)
            except Exception as e:
                print(f"\nError: {str(e)}")

    async def cleanup(self):
        """Clean up resources"""
        await self.exit_stack.aclose()


async def main():
    if len(sys.argv) < 2:
        print("Usage: python client.py <path_to_server_script>")
        sys.exit(1)

    client = MCPClient()
    try:
        await client.connect_to_server(sys.argv[1])
        await client.chat_loop()
    finally:
        await client.cleanup()


if __name__ == "__main__":
    asyncio.run(main())
```

3. Thoughts on MCP

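MCP's advantages are clear. It is not tied to a specific AI model: a tool written once as an MCP server can be used by any MCP client, whichever model that client runs. You no longer have to rewrite tool integrations for each model's function-calling interface, so developers can focus on building the MCP client or server. The client still relies on the model's function-calling support to decide which tool to invoke, as the DeepSeek client above shows. Combined with agents and function calling, MCP should have a lot to offer wherever AI is applied to real business scenarios.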

4. References

https://gist.github.com/zckly/f3f28ea731e096e53b39b47bf0a2d4b1

MCP Python client fails to load the server · Issue #291 · modelcontextprotocol/python-sdk